Michael Dinh

Oracle Is Guilty Until Proven Innocent

Received email from Technical Lead | Senior Manager for the following errors.

Error Description: 0: Invalid pool name ‘oraclePool’ while getting a database connection.

Please check for consistency of the properties files or BPML

Time of Event: 20240419141429

Workflow Id: 88867

First inclination is to check Oracle database parameters (sessions and processes) which wasted time on a wild goose chase.

I am by no mean an expert but Google is your friend.

It puzzle me how a Technical Lead | Senior Manager does not know how to Google.

LMGTFY – Let Me Google That For You for all those people who find it more convenient to bother you with their question rather than to Google it for themselves.

Oracle OEM Read Only Access

With great power comes great responsibility.

Reference: https://en.wikipedia.org/wiki/With_great_power_comes_great_responsibility

On boarding 4 Database Consultants and they have request access to OEM. Sharing SYSMAN password with every DBA is not a good idea and also difficult to determined who messed up.

Here are 2 articles and I favor Doc ID 2180307.1 based on last update and contains screenshots.

OEM 13c How To Create an Administrator with Read Only Access (Doc ID 2925232.1)

Enterprise Manager Base Platform – Version 13.4.0.0.0 and later

Last Update: Feb 1, 2023

EM 13c : How to Create an EM Administrator with Read Only Access to the Performance Pages of a Database Target? (Doc ID 2180307.1)

Enterprise Manager for Oracle Database – Version 13.1.1.0.0 and later

Last Update: May 9, 2023

Would have been nice to have emcli script to do this but beggars cannot be choosers.

Zero-Sum Game

Zero-sum is a situation, often cited in game theory, in which one person’s gain is equivalent to another’s loss, so the net change in wealth or benefit is zero.

A zero-sum game may have as few as two players or as many as millions of participants.

Reference: Zero-Sum Game Definition in Finance, With Example

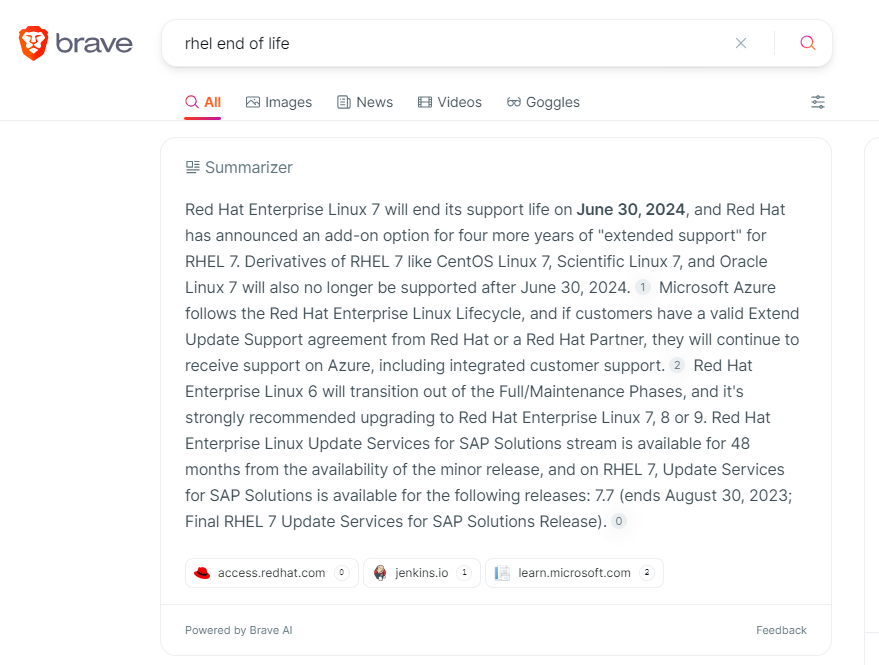

This is what the database team is facing because system team is upgrading operating system to Red Hat Enterprise Linux 7 which will reach its end of life on June 30, 2024 (2 months later).

Here are some Oracle Docs with may be helpful.

IMPORTANT: Need to relink GI and DB

There is one step missing and do you know what it is? Keep in mind, not all environments are the same.

How To Relink The Oracle Grid Infrastructure Standalone (Restart) Installation Or

Oracle Grid InfrastructureRAC/ClusterInstallation (11.2 to 21c).

Doc ID 1536057.1

Relinking Oracle Home FAQ ( Frequently Asked Questions)

Doc ID 1467060.1

Executing “relink all” resets permission of extjob, jssu, oradism, externaljob.ora

Oracle Database – Enterprise Edition – Version 10.2.0.3 and later

Doc ID 1555453.1

The Best vs. The Rest

CAUTION: whine being served next.

Currently, Oracle database version 11.2.0.4 is running on RHEL 6.10 and database version 12.1.0.2 is running on RHEL 6.10, 7.1, 7.5.

System administrators decides to upgrade OS to RHEL 7.9 which will be EOL.

Basically, ZERO sum gain.

In addition, there are plans to migrate away from Oracle RDBMS and to Non-Oracle cloud.

I am not the sharpest tool in the shed, but isn’t this like putting lipstick on the pig before the pig get slaughter?

Common Sense

I am often reminded of of my experience at 24 Hour Fitness24 Hour Fitness from 2005.

Just purchased Oracle Wait Interface: A Practical Guide to Performance Diagnostics & Tuning and a consultant (we call him Dibs) tells me to throw the book away.

Book is not going to help you if you don’t have commons sense.

SQL Server Gateway (/tmp 100% FULL)

Trouble Shooting :=)

hostname:/home/oracle$ df -h /tmp

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg01-tmplv

12G 12G 4.2M 100% /tmp

hostname:/home/oracle$

hostname:/home/oracle$ lsof +D /tmp

lsof: WARNING: can't opendir(/tmp/lost+found): Permission denied

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

dg4msql 7319 oracle 10u REG 253,26 11290202112 39 /tmp/qe9vfnzS

java 18849 oracle mem REG 253,26 32768 1441794 /tmp/hsperfdata_oracle/18849

hostname:/home/oracle$

hostname:/home/oracle$ rm -fv /tmp/qeOGiDuK

removed `/tmp/qeOGiDuK'

hostname:/home/oracle$

The process dg4msql (Oracle Database Gateway for SQL Server) used up 100% tmp space.

Temp space for dg4msql is analogous to temporary tablespace for Oracle database.

There can be Multiple Temporary Tablespaces in Oracle and why not multiple tmp directory for OS!

Solution provided by Oracle support.

Those temp files starting with “qe*” are a necessity by the driver and they will be created nevertheless.

One option to avoid to generate in /tmp files if you are running out of space is to set the variable QE_TMPDIR.

This variabe would only change the location where the tmp files are stored. In case you have a partition with more space than the default location of /tmp

You can set this parameter choosing one of the below options –

A) – Set the env variable in the gateway init file specifying the new location of the files, adding the below parameter

HS_FDS_CONNECT_INFO=…

:

#

SET PRIVATE QE_TMPDIR=/var/tmp

B) – Or place it into the listener.ora entry for the gateway using the ENV= parameter, for example:

(SID_DESC=

(SID_NAME=sid_name)

(ORACLE_HOME=)

(ENVS=QE_TMPDIR=/var/tmp,LD_LIBRARY_PATH=/dg4msql/driver/lib:/lib)

(PROGRAM=dg4msql)

)

Either specifying in the gateway init file or in the listener.ora file, stop/start the listener and monitor again.

One suggestion is to check the contents of the QE_TMPDIR or /tmp once a week and see if there are files there, how big they are and how old they are.

If the contents of the QE_TMPDIR or /tmp seem empty and suddenly it fills up; then it is one of your queries that is passing thru a lot of data.

You would need to have that query or session identified and check if there is one way you can make it spread the work a little bit, using a PL/SQL stored procedure that does a few commits along the way, releasing some space.

Closing thought, FS and DB TEMP space should be the same size to prevent issues.

The Beginning Of The End

Started blogging June 19, 2007.

Thank you for reading!

No better way to end it than the year of Dragon [Wood] (Chinese Horoscope).

Be well; be kind; be happy.

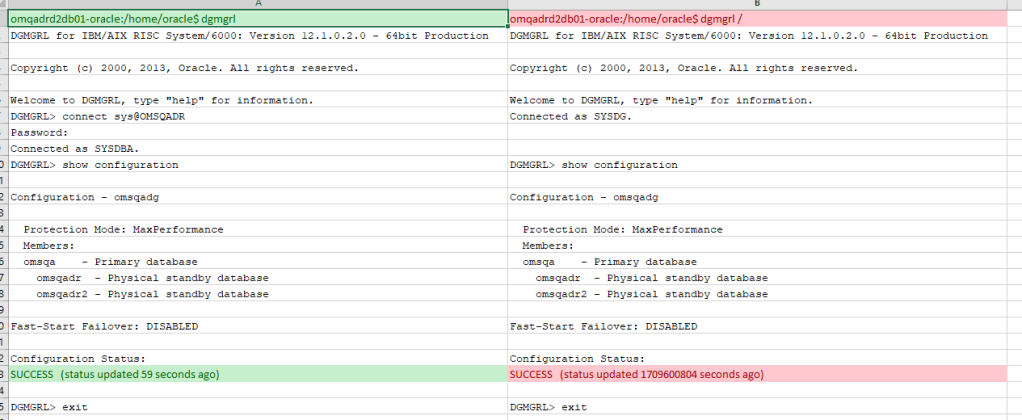

SRDC – Collect Data Guard Diagnostic Information (Doc ID 2219763.1)

Been in DataGuard HELL and Doc ID 2219763.1 is a good starting point for troubleshooting.

Many SR’s opened without any success. Oracle suppport suggest to recreate controlfile – YIKES!

Basically, RMAN backup is being performed from standby.

From primary RMAN deletes archivelog to prevent fill up.

My unnderstanding, if archivelogs are in FRA, then cleanup shoud be automatic provided there are no BUGS.

Solution for QA – RMAN delete archivelogs without connecting to catalog at primary

and RMAN backups connecting to catalog at standby. Not sure if there are any repercussions.

RMAN Archivelog Deletion Errors – 12.1.0.2.0 – Script below seems overly complicated.

Any suggestions or ideas? TIA.

16> connect target *

17> connect catalog *

18>

19> crosscheck archivelog all;

20> delete noprompt archivelog until time 'sysdate-4/24';

21> list archivelog all;

22>

23> run {

24> allocate channel c1 device type disk;

25> SQL "alter database backup controlfile to trace as

25> ''/tmp/ctl_@_trace.sql'' reuse resetlogs";

26> SQL "create pfile=''/tmp/init@.ora'' from spfile";

27> release channel c1;

28> }

29> show all;

30> crosscheck archivelog all;

31> crosscheck backup;

32> delete noprompt obsolete;

33> delete noprompt expired backup;

34> resync catalog from db_unique_name all;

35>

RMAN-06216: WARNING: db_unique_name mismatch - 7 objects could not be updated

RMAN-06218: List of objects requiring same operation on database with db_unique_name SID_DR

RMAN-06211: ==========================

RMAN-06212: Object Type Filename/Handle

RMAN-06213: --------------- ---------------------------------------------------

RMAN-06214: Backup Piece c-1072717639-20240124-03

RMAN-06214: Backup Piece c-1072717639-20240124-05

RMAN-06214: Backup Piece c-1072717639-20240124-07

RMAN-06214: Backup Piece c-1072717639-20240125-01

RMAN-06214: Backup Piece c-1072717639-20240125-03

RMAN-06214: Backup Piece c-1072717639-20240125-05

RMAN-06214: Backup Piece c-1072717639-20240126-00

RMAN-06216: WARNING: db_unique_name mismatch - 6 objects could not be updated

RMAN-06218: List of objects requiring same operation on database with db_unique_name SID_DR

RMAN-06211: ==========================

RMAN-06212: Object Type Filename/Handle

RMAN-06213: --------------- ---------------------------------------------------

RMAN-06214: Backup Piece c-1072717639-20240124-02

RMAN-06214: Backup Piece c-1072717639-20240125-00

RMAN-06214: Backup Piece c-1072717639-20240125-02

RMAN-06214: Backup Piece c-1072717639-20240125-04

RMAN-06214: Backup Piece c-1072717639-20240125-07

RMAN-06214: Backup Piece c-1072717639-20240126-01

RMAN-06207: WARNING: 6 objects could not be deleted for SBT_TAPE channel(s) due

RMAN-06208: to mismatched status. Use CROSSCHECK command to fix status

RMAN-06210: List of Mismatched objects

RMAN-06211: ==========================

RMAN-06212: Object Type Filename/Handle

RMAN-06213: --------------- ---------------------------------------------------

RMAN-06214: Backup Piece c-1072717639-20240124-02

RMAN-06214: Backup Piece c-1072717639-20240124-05

RMAN-06214: Backup Piece c-1072717639-20240125-00

RMAN-06214: Backup Piece c-1072717639-20240125-02

RMAN-06214: Backup Piece c-1072717639-20240125-04

RMAN-06214: Backup Piece c-1072717639-20240125-07

RMAN-06207: WARNING: 6 objects could not be deleted for SBT_TAPE channel(s) due

RMAN-06208: to mismatched status. Use CROSSCHECK command to fix status

RMAN-06210: List of Mismatched objects

RMAN-06211: ==========================

RMAN-06212: Object Type Filename/Handle

RMAN-06213: --------------- ---------------------------------------------------

RMAN-06214: Backup Piece c-1072717639-20240124-03

RMAN-06214: Backup Piece c-1072717639-20240124-07

RMAN-06214: Backup Piece c-1072717639-20240125-01

RMAN-06214: Backup Piece c-1072717639-20240125-03

RMAN-06214: Backup Piece c-1072717639-20240125-05

RMAN-06214: Backup Piece c-1072717639-20240126-00

Remove All Vagrant Boxes

I have been struggling with Vagrant Boxes for Microsoft Windows 11 Pro.

Destroy them all and start over again.

pegasus@Greek MINGW64 ~

$ vagrant box list | cut -f 1 -d ' ' | xargs -L 1 vagrant box remove -f

Removing box 'bento/ubuntu-18.04' (v202303.13.0) with provider 'virtualbox'...

Removing box 'bigdeal/mysql57' (v18.3.4) with provider 'virtualbox'...

Removing box 'centos/7' (v2004.01) with provider 'virtualbox'...

Removing box 'cosm/centos7_mysql5.6' (v5.6.51.20210213) with provider 'virtualbox'...

Removing box 'dtest/mysql-ce-5.6' (v0.1.0) with provider 'virtualbox'...

Removing box 'oraclebase/oracle-7' (v2023.10.18) with provider 'virtualbox'...

Removing box 'oraclelinux/7' (v7.9.517) with provider 'virtualbox'...

Removing box 'stevobengtson/vt-mysql-5.6' (v1.1) with provider 'virtualbox'...

Removing box 'thanh_lh/lam-php-5.3-mysql-5.6-centos-6.8-ecube-2-cfg' (v0.0.2) with provider 'virtualbox'...

Removing box 'ubuntu/trusty64' (v20190514.0.0) with provider 'virtualbox'...

pegasus@Greek MINGW64 ~

$ systeminfo

Host Name: GREEK

OS Name: Microsoft Windows 11 Pro

OS Version: 10.0.22631 N/A Build 22631

OS Manufacturer: Microsoft Corporation

OS Configuration: Standalone Workstation

OS Build Type: Multiprocessor Free

Registered Owner: pegasus

Registered Organization: N/A

Product ID: 00330-54193-28148-AAOEM

Original Install Date: Fri/6/9/2023, 7:23:01 PM

System Boot Time: Tue/1/9/2024, 7:03:46 PM

System Manufacturer: Micro Computer(HK) Tech Limited

System Model: Neptune series(i9)

System Type: x64-based PC

Processor(s): 1 Processor(s) Installed.

[01]: Intel64 Family 6 Model 154 Stepping 3 GenuineIntel ~2500 Mhz

BIOS Version: American Megatrends International, LLC. 1.0B, Tue/2/21/2023

Windows Directory: C:\WINDOWS

System Directory: C:\WINDOWS\system32

Boot Device: \Device\HarddiskVolume1

System Locale: en-us;English (United States)

Input Locale: en-us;English (United States)

Time Zone: (UTC-08:00) Pacific Time (US & Canada)

Total Physical Memory: 32,545 MB

Available Physical Memory: 19,213 MB

Virtual Memory: Max Size: 34,593 MB

Virtual Memory: Available: 19,965 MB

Virtual Memory: In Use: 14,628 MB

Page File Location(s): C:\pagefile.sys

Domain: WORKGROUP

Logon Server: \\GREEK

Hotfix(s): 4 Hotfix(s) Installed.

[01]: KB5033920

[02]: KB5027397

[03]: KB5034123

[04]: KB5032393

Network Card(s): 8 NIC(s) Installed.

[01]: Windscribe VPN

Connection Name: Local Area Connection

Status: Media disconnected

[02]: Windscribe Windtun420

Connection Name: Local Area Connection 2

Status: Media disconnected

[03]: Intel(R) Ethernet Controller (3) I225-V

Connection Name: Ethernet

DHCP Enabled: Yes

DHCP Server: 192.168.1.254

IP address(es)

[01]: 192.168.1.214

[02]: fe80::1a81:c46a:bf:55e5

[04]: Intel(R) Wi-Fi 6E AX210 160MHz

Connection Name: Wi-Fi

Status: Media disconnected

[05]: VirtualBox Host-Only Ethernet Adapter

Connection Name: Ethernet 2

DHCP Enabled: No

IP address(es)

[01]: 192.168.56.1

[02]: fe80::7fb6:5404:2b52:1346

[06]: VirtualBox Host-Only Ethernet Adapter

Connection Name: Ethernet 3

DHCP Enabled: No

IP address(es)

[01]: 192.168.100.1

[02]: fe80::f854:a563:d547:99f2

[07]: VirtualBox Host-Only Ethernet Adapter

Connection Name: Ethernet 4

DHCP Enabled: No

IP address(es)

[01]: 192.168.33.1

[02]: fe80::91b0:da47:2c8f:e543

[08]: WireGuard Tunnel

Connection Name: WindscribeWireguard

DHCP Enabled: No

IP address(es)

[01]: 100.73.168.139

Hyper-V Requirements:

A hypervisor has been detected.

Features required for Hyper-V will not be displayed.

pegasus@Greek MINGW64 ~

$Using nfsstat To Find NFS Mounts

What’s so diffificult about finding NFS mount points?

Nothing except for when there are 23+ hosts with different OS flavors and versions.

12.1.0.2|RHEL 6.10

12.1.0.2|RHEL 7.5

11.2.0.4|AIX 7.1

12.2.0.1|AIX 7.2

Lucky for me, I was able to find nfsstat which worked for all the versions above.

Just run nfsstat -m to find all NFS mounts.

nfsstat

Purpose:

Displays statistical information ab1out the Network File System ( ) and Remote Procedure Call (RPC) calls.

Syntax:

/usr/sbin/nfsstat [ -@ WparName ] [ -c ] [ -d ] [ -s ] [ -n ] [ -r ] [ -m ] [ -4 ] [ -z ] [ -t] [-b] [ -g ]

Example: 12.1.0.2.0 (RHEL 7.5)

nfsstat -m

/tmp/Admin-Out from 172.31.250.60:/vol/vol4/Admin-Out

RAC Off – Relinking the RAC Option Worked

Starting RAC database with srvctl resulted with error below.

ORA-00439: feature not enabled: Real Application Clusters

Rac On / Rac Off – Relinking the RAC Option (Doc ID 211177.1)

RAC OFF

1. Login as the Oracle software owner and shutdown all database instances on all nodes in the cluster.

2. cd $ORACLE_HOME/rdbms/lib

3. make -f ins_rdbms.mk rac_off

If this step did not fail with fatal errors, proceed to step 4.

4. make -f ins_rdbms.mk ioracle

Note: If these steps fail with a Fatal Error, then you will need to open a Service Request and submit these errors to Oracle Support for analysis.

================================================================================

### STOP Database

================================================================================

[oracle@ol7-121-rac1 ~]$ srvctl stop database -d cdbrac

[oracle@ol7-121-rac1 ~]$ srvctl status database -d cdbrac -v

Instance cdbrac1 is not running on node ol7-121-rac1

Instance cdbrac2 is not running on node ol7-121-rac2

[oracle@ol7-121-rac1 ~]$

================================================================================

### make -f ins_rdbms.mk rac_off

================================================================================

[oracle@ol7-121-rac1 ~]$ cd $ORACLE_HOME/rdbms/lib

[oracle@ol7-121-rac1 lib]$ make -f ins_rdbms.mk rac_off

rm -f /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libskgxp12.so

cp /u01/app/oracle/product/12.1.0.2/dbhome_1/lib//libskgxpg.so /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libskgxp12.so

rm -f /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libskgxn2.so

cp /u01/app/oracle/product/12.1.0.2/dbhome_1/lib//libskgxns.so \

/u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libskgxn2.so

/usr/bin/ar d /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/libknlopt.a kcsm.o

/usr/bin/ar cr /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/libknlopt.a /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/ksnkcs.o

[oracle@ol7-121-rac1 lib]$

================================================================================

### make -f ins_rdbms.mk ioracle

================================================================================

[oracle@ol7-121-rac1 lib]$ make -f ins_rdbms.mk ioracle

chmod 755 /u01/app/oracle/product/12.1.0.2/dbhome_1/bin

- Linking Oracle

rm -f /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/oracle

/u01/app/oracle/product/12.1.0.2/dbhome_1/bin/orald -o /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/oracle -m64 -z noexecstack -Wl,--disable-new-dtags -L/u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/ -L/u01/app/oracle/product/12.1.0.2/dbhome_1/lib/ -L/u01/app/oracle/product/12.1.0.2/dbhome_1/lib/stubs/ -Wl,-E /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/opimai.o /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/ssoraed.o /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/ttcsoi.o -Wl,--whole-archive -lperfsrv12 -Wl,--no-whole-archive /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/nautab.o /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/naeet.o /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/naect.o /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/naedhs.o /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/config.o -lserver12 -lodm12 -lcell12 -lnnet12 -lskgxp12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lxml12 -lcore12 -lunls12 -lsnls12 -lnls12 -lcore12 -lnls12 -lclient12 -lvsn12 -lcommon12 -lgeneric12 -lknlopt `if /usr/bin/ar tv /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/libknlopt.a | grep xsyeolap.o > /dev/null 2>&1 ; then echo "-loraolap12" ; fi` -lskjcx12 -lslax12 -lpls12 -lrt -lplp12 -lserver12 -lclient12 -lvsn12 -lcommon12 -lgeneric12 `if [ -f /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libavserver12.a ] ; then echo "-lavserver12" ; else echo "-lavstub12"; fi` `if [ -f /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/libavclient12.a ] ; then echo "-lavclient12" ; fi` -lknlopt -lslax12 -lpls12 -lrt -lplp12 -ljavavm12 -lserver12 -lwwg `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/ldflags` -lncrypt12 -lnsgr12 -lnzjs12 -ln12 -lnl12 -lnro12 `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/ldflags` -lncrypt12 -lnsgr12 -lnzjs12 -ln12 -lnl12 -lnnzst12 -lzt12 -lztkg12 -lmm -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lxml12 -lcore12 -lunls12 -lsnls12 -lnls12 -lcore12 -lnls12 -lztkg12 `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/ldflags` -lncrypt12 -lnsgr12 -lnzjs12 -ln12 -lnl12 -lnro12 `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/ldflags` -lncrypt12 -lnsgr12 -lnzjs12 -ln12 -lnl12 -lnnzst12 -lzt12 -lztkg12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lxml12 -lcore12 -lunls12 -lsnls12 -lnls12 -lcore12 -lnls12 `if /usr/bin/ar tv /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/libknlopt.a | grep "kxmnsd.o" > /dev/null 2>&1 ; then echo " " ; else echo "-lordsdo12 -lserver12"; fi` -L/u01/app/oracle/product/12.1.0.2/dbhome_1/ctx/lib/ -lctxc12 -lctx12 -lzx12 -lgx12 -lctx12 -lzx12 -lgx12 -lordimt12 -lclsra12 -ldbcfg12 -lhasgen12 -lskgxn2 -lnnzst12 -lzt12 -lxml12 -locr12 -locrb12 -locrutl12 -lhasgen12 -lskgxn2 -lnnzst12 -lzt12 -lxml12 -lgeneric12 -loraz -llzopro -lorabz2 -lipp_z -lipp_bz2 -lippdcemerged -lippsemerged -lippdcmerged -lippsmerged -lippcore -lippcpemerged -lippcpmerged -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lxml12 -lcore12 -lunls12 -lsnls12 -lnls12 -lcore12 -lnls12 -lsnls12 -lunls12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lcore12 -lsnls12 -lnls12 -lxml12 -lcore12 -lunls12 -lsnls12 -lnls12 -lcore12 -lnls12 -lasmclnt12 -lcommon12 -lcore12 -laio -lons `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/sysliblist` -Wl,-rpath,/u01/app/oracle/product/12.1.0.2/dbhome_1/lib -lm `cat /u01/app/oracle/product/12.1.0.2/dbhome_1/lib/sysliblist` -ldl -lm -L/u01/app/oracle/product/12.1.0.2/dbhome_1/lib

test ! -f /u01/app/oracle/product/12.1.0.2/dbhome_1/bin/oracle ||\

mv -f /u01/app/oracle/product/12.1.0.2/dbhome_1/bin/oracle /u01/app/oracle/product/12.1.0.2/dbhome_1/bin/oracleO

mv /u01/app/oracle/product/12.1.0.2/dbhome_1/rdbms/lib/oracle /u01/app/oracle/product/12.1.0.2/dbhome_1/bin/oracle

chmod 6751 /u01/app/oracle/product/12.1.0.2/dbhome_1/bin/oracle

[oracle@ol7-121-rac1 lib]$

================================================================================

### srvctl config database -d cdbrac -v

================================================================================

[oracle@ol7-121-rac1 lib]$ srvctl config database -d cdbrac -v

Database unique name: cdbrac

Database name: cdbrac

Oracle home: /u01/app/oracle/product/12.1.0.2/dbhome_1

Oracle user: oracle

Spfile: +DATA/CDBRAC/PARAMETERFILE/spfile.277.1155927597

Password file: +DATA/CDBRAC/PASSWORD/pwdcdbrac.257.1155927083

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group:

Database instances: cdbrac1,cdbrac2

Configured nodes: ol7-121-rac1,ol7-121-rac2

Database is administrator managed

[oracle@ol7-121-rac1 lib]$

================================================================================

### srvctl start database -d cdbrac

================================================================================

[oracle@ol7-121-rac1 lib]$ srvctl start database -d cdbrac

PRCR-1079 : Failed to start resource ora.cdbrac.db

CRS-5017: The resource action "ora.cdbrac.db start" encountered the following error:

ORA-00439: feature not enabled: Real Application Clusters.

For details refer to "(:CLSN00107:)" in "/u01/app/oracle/diag/crs/ol7-121-rac1/crs/trace/crsd_oraagent_oracle.trc".

CRS-2674: Start of 'ora.cdbrac.db' on 'ol7-121-rac1' failed

CRS-2632: There are no more servers to try to place resource 'ora.cdbrac.db' on that would satisfy its placement policy

[oracle@ol7-121-rac1 lib]$RAC OPTION OFF after upgrade to 12.1

Oh, The Irony!

Reference Doc ID 2494637.1 has all the details.

dba_registry shows OFF while database parameters show ON.

SQL> select comp_name,version,status from dba_registry;

COMP_NAME VERSION STATUS

Oracle Real Application Clusters 12.1.0.2.0 OPTION OFF

Connected to:

Oracle Database 12c Enterprise Edition Release 12.1.0.2.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Advanced Analytics and Real Application Testing options

SQL> show parameter cluster

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

cluster_database boolean TRUE

cluster_database_instances integer 2

Rac On / Rac Off – Oracle Does Not Make It Easy

Working on task to convert RAC database to Single Instance database.

There are so many options and which to chose and is the best?

make -f ins_rdbms.mk rac_off and make -f ins_rdbms.mk ioracle was used.

Only issue is process ora_lmon_racdb1 is running.

Here are options and how to validate.

Rac On / Rac Off – Relinking the RAC Option (Doc ID 211177.1)

RAC OFF

- Login as the Oracle software owner and shutdown all database instances on all nodes in the cluster.

- cd $ORACLE_HOME/rdbms/lib

- make -f ins_rdbms.mk rac_off

If this step did not fail with fatal errors, proceed to step 4. - make -f ins_rdbms.mk ioracle

Note: If these steps fail with a Fatal Error, then you will need to open a Service Request and submit these errors to Oracle Support for analysis.

How to Check Whether Oracle Binary/Instance is RAC Enabled and Relink Oracle Binary in RAC (Doc ID 284785.1)

On Linux/UNIX except AIX:

ar -t $ORACLE_HOME/rdbms/lib/libknlopt.a|grep kcsm.o

To check whether a running instance is a RAC instance :

Multiple options here:

- Check sqlplus banner (Applicable to Windows):

To check whether a running instance is a RAC instance :

With the Partitioning, Real Application Clusters, OLAP and Data Mining options - Check whether lmon background process exists for the instance

- Check cluster_database parameter

SQL> show parameter cluster_database

Output “true” means it’s RAC instance but this is not reliable as a RAC instance may have cluster_database set to false during maintenance period.

Create MySQL In Vagrant Box

First, thank you to jazzfogPavel for https://github.com/jazzfog/MySql-in-Vagrant as it was straight forward.

Too tired and too lazy to explain all the steps; however, the output should be self explanatory.

pegasus@Greek MINGW64 /c/vagrant/MySql-in-Vagrant (master)

$ ls -al

ls -al

total 25

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:37 .

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:34 ..

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:34 .git

-rw-r--r-- 1 pegasus 197121 31 Nov 19 06:34 .gitignore

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:35 .vagrant

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:37 MysqlData

-rw-r--r-- 1 pegasus 197121 635 Nov 19 06:34 Vagrantfile

drwxr-xr-x 1 pegasus 197121 0 Nov 19 06:34 provision

-rw-r--r-- 1 pegasus 197121 3010 Nov 19 06:34 readme.md

pegasus@Greek MINGW64 /c/vagrant/MySql-in-Vagrant (master)

$

======================================================================

pegasus@Greek MINGW64 /c/vagrant

$ git clone https://github.com/jazzfog/MySql-in-Vagrant

Cloning into 'MySql-in-Vagrant'...

remote: Enumerating objects: 32, done.

remote: Total 32 (delta 0), reused 0 (delta 0), pack-reused 32

Receiving objects: 100% (32/32), 5.32 KiB | 5.32 MiB/s, done.

Resolving deltas: 100% (12/12), done.

pegasus@Greek MINGW64 /c/vagrant

$ ls

MySql-in-Vagrant/ vagrant/ vagrant-projects/

pegasus@Greek MINGW64 /c/vagrant

$ cd MySql-in-Vagrant/

pegasus@Greek MINGW64 /c/vagrant/MySql-in-Vagrant (master)

$ ls

Vagrantfile provision/ readme.md

pegasus@Greek MINGW64 /c/vagrant/MySql-in-Vagrant (master)

$ vagrant up

Bringing machine 'default' up with 'virtualbox' provider...

==> default: Importing base box 'ubuntu/trusty64'...

vagrant@vagrant-ubuntu-trusty-64:~$ mysql -V

mysql Ver 14.14 Distrib 5.5.62, for debian-linux-gnu (x86_64) using readline 6.3

vagrant@vagrant-ubuntu-trusty-64:~$ mysql -u root

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 37

Server version: 5.5.62-0ubuntu0.14.04.1 (Ubuntu)

Copyright (c) 2000, 2018, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> SELECT version();

+-------------------------+

| version() |

+-------------------------+

| 5.5.62-0ubuntu0.14.04.1 |

+-------------------------+

1 row in set (0.00 sec)

mysql> STATUS;

--------------

mysql Ver 14.14 Distrib 5.5.62, for debian-linux-gnu (x86_64) using readline 6.3

Connection id: 37

Current database:

Current user: root@localhost

SSL: Not in use

Current pager: stdout

Using outfile: ''

Using delimiter: ;

Server version: 5.5.62-0ubuntu0.14.04.1 (Ubuntu)

Protocol version: 10

Connection: Localhost via UNIX socket

Server characterset: latin1

Db characterset: latin1

Client characterset: utf8

Conn. characterset: utf8

UNIX socket: /var/run/mysqld/mysqld.sock

Uptime: 5 min 13 sec

Threads: 1 Questions: 113 Slow queries: 0 Opens: 48 Flush tables: 1 Open tables: 41 Queries per second avg: 0.361

--------------

mysql> SHOW VARIABLES LIKE "%version%";

+-------------------------+-------------------------+

| Variable_name | Value |

+-------------------------+-------------------------+

| innodb_version | 5.5.62 |

| protocol_version | 10 |

| slave_type_conversions | |

| version | 5.5.62-0ubuntu0.14.04.1 |

| version_comment | (Ubuntu) |

| version_compile_machine | x86_64 |

| version_compile_os | debian-linux-gnu |

+-------------------------+-------------------------+

7 rows in set (0.00 sec)

mysql>

Modify Connection For Oracle Database Gateway To SQL Server

Google did not help me on this one and had to RTFM. Hope this helps you.

During installation, connection to SQL Server was defined; now, another SQL Server should be used instead.

Need to modify initdg4msql.ora where $ORACLE_HOME is the Gateway Home.

db01-oracle:/home/oracle$ cat $ORACLE_HOME/dg4msql/admin/initdg4msql.ora

# This is a customized agent init file that contains the HS parameters

# that are needed for the Database Gateway for Microsoft SQL Server

#

# HS init parameters

#

# Michael Dinh : Oct 25, 2023

# HS_FDS_CONNECT_INFO=[VMXXENTPOCD01]:1434//TEST_BACKUP

HS_FDS_CONNECT_INFO=[AVSFHEQDDB01]:1433//LATEST

# alternate connect format is hostname/serverinstance/databasename

HS_FDS_TRACE_LEVEL=OFF

HS_FDS_RECOVERY_ACCOUNT=RECOVER

HS_FDS_RECOVERY_PWD=RECOVER

db01-oracle:/home/oracle$

Create database link from Oracle to SQLServer database.

SQL> CREATE PUBLIC DATABASE LINK SQLSERVER1 CONNECT TO TESTADMIN IDENTIFIED BY "latest123" USING 'dg4msql';

Database link created.

SQL> select * from LA@SQLSERVER1;

select * from LA@SQLSERVER1

*

ERROR at line 1:

ORA-28500: connection from ORACLE to a non-Oracle system returned this message:

[Oracle][ODBC SQL Server Wire Protocol driver][Microsoft SQL Server]The SELECT permission was denied on the object 'LA', database

'LaTest', schema 'dbo'. {42000,NativeErr = 229}[Oracle][ODBC SQL Server Wire Protocol driver][Microsoft SQL Server]The metadata

could not be determined because every code path results in an error; see previous errors for some of these. {HY000,NativeErr =

11529}

ORA-02063: preceding 2 lines from SQLSERVER1

SQL>

For SQLServer, provided dbreader role to TestAdmin. I am not aware of all the privleges as another team is managing SQLServer.

SQL> col name for a15

SQL> col address for a15

SQL> col zip for a15

SQL> select * from LA@SQLSERVER1;

Name Address Zip

--------------- --------------- ---------------

Michael Address A 123-4567

Clyde Address B 345-6789

Mahesh Address C 456-6789

SQL>

Troubleshooting Oracle Database Gateway Installation : INS -07003 Unexpected Error Occured While Accessing The Bean Store.

Installing 11.2.0.4 Oracle Database Gateway for Database 11.2.0.4 failed with Error In Invoking Target ‘idg4msql’ Of Makefile $OH/rdbms/lib/ins_rdbms.mk’.

Create SR and oracle support advise to use 12cR2 version.

Installing 12cR2 Oracle Database Gateway for Database 11.2.0.4 failed with [INS-07003] Unexpected error occurred while accessing the bean store.

Finally was able to get pass the error and hopefully no further issues.

The final trick is export PS1=”\u@\h:\${ORACLE_SID}:\${PWD}\n$ “

oracle@db01::/home/oracle

$ source unset_vars.sh

oracle@db01::/home/oracle

$ cd /u01/app/oracle/gateways

oracle@db01::/u01/app/oracle/gateways

$ ./runInstaller

Starting Oracle Universal Installer…

Checking Temp space: must be greater than 415 MB. Actual 4967 MB Passed

Checking swap space: must be greater than 150 MB. Actual 16095 MB Passed

Checking monitor: must be configured to display at least 256 colors. Actual 1 6777216 Passed

Preparing to launch Oracle Universal Installer from /tmp/OraInstall2023-10-21_08 -34-22AM. Please wait …

oracle@db01::/u01/app/oracle/gateways

$

Basically, the enviroment has way too many variables which needed to be unset before excuting runInstaller

oracle@db01::/home/oracle

$ cat unset_vars.sh

unset cyan

unset smul

unset magenta

unset smso

unset reset

unset rmso

unset rmul

unset dim

unset white

unset green

unset black

unset yellow

unset red

unset bold

unset blue

unset rev

unset white

unset green

unset black

unset NLS_LANG

unset LS_COLORS

unset NLS_DATE_FORMAT

export PS1="\u@\h:\${ORACLE_SID}:\${PWD}\n$ "

oracle@db01::/home/oracle

$

Drop RAC Database Using RMAN

As part of data center consolidation, some databases are no longer required and need to be dropped.

I have previouly posted about dropping databases; however, it was single instance vs RAC.

### Check status of database:

db01-oracle:/home/oracle$ srvctl status database -d loadtest -v

Database loadtest is running. Instance status: Open.

db01-oracle:/home/oracle$

### Modify cluster database parameters:

SQL> show parameter cluster_database

NAME TYPE VALUE

------------------------- ----------- ------------

cluster_database boolean FALSE

cluster_database_instances integer 1

SQL> show parameter db_name

NAME TYPE VALUE

------------------------- ----------- ------------

db_name string loadtest

### Startup force mount restrict:

SQL> startup force mount restrict;

ORACLE instance started.

Total System Global Area 2.1475E+10 bytes

Fixed Size 3795712 bytes

Variable Size 1.2885E+10 bytes

Database Buffers 8522825728 bytes

Redo Buffers 63311872 bytes

Database mounted.

!!! Verify logins=RESTRICTED and parallel=NO !!!

SQL> select logins, parallel from v$instance;

LOGINS PAR

---------- ---

RESTRICTED NO

SQL>

### RMAN: drop database including backups ###

/home/oracle$ rman target /

Recovery Manager: Release 12.1.0.2.0 - Production on Mon Sep 25 11:41:45 2023

Copyright (c) 1982, 2014, Oracle and/or its affiliates. All rights reserved.

connected to target database: loadtest (DBID=983159180, not open)

RMAN> drop database including backups;

database name is "loadtest" and DBID is 983159180

Do you really want to drop all backups and the database (enter YES or NO)?

nected to target database: loadtest (DBID=983159180, not open)

RMAN> drop database including backups;

database name is "loadtest" and DBID is 983159180

Do you really want to drop all backups and the database (enter YES or NO)? yes

using target database control file instead of recovery catalog

allocated channel: ORA_SBT_TAPE_1

channel ORA_SBT_TAPE_1: SID=101 device type=SBT_TAPE

channel ORA_SBT_TAPE_1: Data Protection for Oracle: version 7.1.3.0

allocated channel: ORA_SBT_TAPE_2

channel ORA_SBT_TAPE_2: SID=148 device type=SBT_TAPE

channel ORA_SBT_TAPE_2: Data Protection for Oracle: version 7.1.3.0

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=199 device type=DISK

allocated channel: ORA_DISK_2

channel ORA_DISK_2: SID=248 device type=DISK

..............................

deleted archived log

archived log file name=+ORFLASHBK/loadtest/ARCHIVELOG/2023_09_22/thread_1_seq_150008.8873.1148227803 RECID=150008 STAMP=1148227802

Deleted 8 objects

..............................

database name is "loadtest" and DBID is 983159180

database dropped

RMAN>